A small, low-profile one-man St. Louis firm that began as a classic American startup like Charles Lindbergh’s centennial “Spirit of St. Louis” aircraft workshop is celebrating a quarter century of building AI synthetic brains that replicate the logic “architecture” of the human brain. What is startling is that Imagination Engines, Inc. (IEI) and its founder, Dr. Stephen Thaler, have for years run circles around ‘Big AI’ companies like Google DeepMind, Microsoft, OpenAI, Anthropic, IBM and others in designing, and conclusively replicating, the brain’s neural architecture.

As a result, Thaler prototyped and patented a totally transparent, human-safe AGI in 1998: 26 years ago! Meanwhile, his ‘Big AI’ competitors spent $223 billion in 2022 (360 Nautica, 2-2-2024) on AI and R&D, and OpenAI alone received $10B in venture capital in 2023. All these firms are racing to build a not-yet-here “artificial general intelligence” (AGI) or ‘superintelligence’, almost three decades after IEI’s breakthrough! IEI has since patented (2019) a 2nd-generation AGI called DABUS (Device for Autonomous Bootstrapping of Unified Sentience) that can handle 600 trillion neurons, eclipsing what its rivals can do with their energy-guzzling teraops and petaops supercomputers. Yet it pulls this off with six high-end Dell PCs!

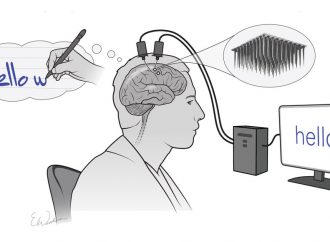

Even more astonishing, DABUS and even its late-1990s ‘Creativity Machine’ and ‘World Brain’ predecessors exhibit ‘sentience’ or ‘consciousness’: the Holy Grail of AI research. How? Because IEI has replicated via electronics and optoelectronics, including LED screens showing every process, the brain’s neurochemicals (serotonin and dopamine), as well as the all-important “seat of consciousness” called the thalamus (which IEI replicates as an ‘alert associative center’). This center spotlights promising associative chains and assembles them into broader constellations of meaning, as noted below.

A short review of IEI’s conceptual history will show how it departed radically, starting in 1975, from the mainstream of computing by analyzing the human brain’s logical processes via artificial neural networks (ANNs) and replicating them in hardware. Of the result, the editor of PC-AI told this writer in 1998: “Thaler has solved the problem of how the brain functions, he’s the first one to do this, nobody else has, including the Manhattan Project’s [famed mathematician] John Von Neumann.”

Starting in 1975, Thaler has filed 23 ‘fundamental’ patents in ANNs covering brain perception, learning and an unexplored area where he broke new ground: internal imagery of new ideas and plans of action. His replication of the brain’s logic processes in optoelectronics and electronics led to his 2021 paper ‘Vast Topological Learning and Sentient AGI’ (Journal of Artificial Intelligence and Consciousness, Vol. 8, No. 1: https://www.worldscientific.com/doi/10.1142/S2705078521500053), where “novel neurocomputing devices generated new concepts along with their anticipated consequences, all encoded as ANNs in geometric forms as chained associative memories... Such encodings may suggest how the human brain summarizes ... its 100 billion cortical neurons. It may also be the paradigm that allows the scaling of synthetic neural systems to brain-like proportions to achieve sentient AGI.”

Indeed, Thaler appears to achieve that with his ‘Device for Autonomous Bootstrapping of Unified Sentience’ (DABUS: US Patent 10423875, 9-24-2019; https://imagination-engines.com/dabus.html). DABUS’s creativity is startling: it has, with minimal training (a dozen vague sketches and photos), produced arresting paintings that would grace any art gallery. It has produced attention-grabbing music from a small set of examples. Above all, it “shows its chops” in autonomously inventing two now-commercial objects: a multi-$billion toothbrush and a ‘fractal container’, a “3D snowflake” with remarkable properties. Both kicked off court battles culminating in a Supreme Court verdict as to whether a non-human AI could patent anything. (Only South Africa’s court has said ‘yes’.)

DABUS’s future is hugely promising. Thaler’s software has tackled extremely tough problems with blinding speed, computational efficiency and hardware simplicity, eclipsing other AI developers: gesture/motion and target/object classification in microseconds (vs hours and platoons of experts for his rivals); virtual synthesis of novel compound semiconductors and beyond-diamond ultrahard materials (he over-fulfilled the number of candidates for his 1976 Air Force sponsor using printed materials data); materials processing breakthroughs in his 20 years at McDonnell Douglas, where he filed 70+ classified patents; classified intelligence work; and other challenges that stymied his rivals. Using his patented object-, notion- and scene-recognition neural software and a duct-taped $50 camera, he outperformed a lavish $20 million NASA space-rendezvous experiment in a 2-hour trial.

As a result, DABUS in 2024 appears years ahead of its ‘Big AI’ rivals. Why this yawning gap? Why the massive computing power and the reportedly $100 million training needed for ChatGPT et al., when IEI’s 600-trillion-neuron system operates with six Dell PCs from Best Buy?

First, rival ‘Big AI’ systems like chatbots don’t need anything complex, only a quick input-output mapping (a transformer), akin to a reflex action for millisecond delivery of results. If a chatbot were to ponder its possible responses (which it cannot), its popularity would plummet. Also, chatbots handle numerous prompts per second, which would overwhelm systems like DABUS (or even humans). By contrast, DABUS comprises an array of neural networks linked together to encode memories and generate ideas. This is often done using commercial workstations, each running one segment of the algorithm as a multithreaded application. However, a serious part of the algorithm leans on a GPU (graphics processing unit) to swiftly populate flat-panel displays, transforming an aid to humans into an active part of any computation.

Second, DABUS is scalable to extremely large parameter counts: IEI has managed “to integrate 600 trillion (600,000,000,000,000) parameters”, again using a mere handful of commercial PCs! In short, there is no problem in the world (if it can be captured in data) that DABUS cannot very quickly address with minimal startup time.

Third, unlike its ‘Big AI’ rivals, DABUS is not a scary “black box” AI that spawns hysteria in US AI policy circles over “Frankenstein AI” that’s “an existential risk to humanity.” In conventional AI production systems, terms and processes are human-readable and thus transparent and understandable, but conventional artificial neural systems are different. They operate as black boxes: a network of interconnected neurons with initially random weights is trained to achieve precise mappings, and it takes serious effort to decipher the rules embedded within these connection weights. DABUS, however, is inherently drill-down-visible with its LED displays and its use of optoelectronics.

Finally, IEI has for decades carved out a ‘lean, mean and hyperefficient’ AI/AGI approach while its rivals have put megabucks into raw power and computing mass. This of course drives the popular perception that AI development is measured in these terms. Yet IEI’s synthetic brains have more parameters than the human brain and run on a handful of Dell PCs, while even scaled-down DABUS variants outperform any multi-$B chatbot. Thaler says, “Our system achieves more because it has free will and can use its subjective feelings to generate creative ideas and encode things and events in its surroundings more deeply and accurately.”

In conclusion, IEI is the world’s only AI developer with 50 years of IP in replicating “wet brain” logic functions in state-of-the-art hardware, with 1st-generation and now 2nd-generation “artificial general intelligence” (AGI) and ‘superintelligence’ to its credit. DABUS is unique in the AI industry as an epochal, lightning-fast trainable and fast-moving enabler tackling an expanding multitude of extremely tough problems: climate change; orbital debris mapping and collision avoidance with ~1 trillion objects in low-earth orbit (LEO); global stock market fluctuations; geophysical mapping for oil & gas companies and hard-rock mining; and “brilliant” personalized healthcare. Just imagine what 600 trillion neurons and parameters can do for a short “running start” for such massive, complex problems! Indeed, it may be time to link up with leading academic neuroscientists to further refine DABUS.

Dr. Thaler invites investors to join him in expanding his DABUS AGI system. Compared to rival AI/AGI developers, his hardware runs at far less cost yet hugely outperforms theirs. He is the only AI engineer who from the beginning chose an unconventional approach which none of his peers had the nerve to try. He now plans to expand DABUS’s performance and ease of application.
